Filter

Introduction

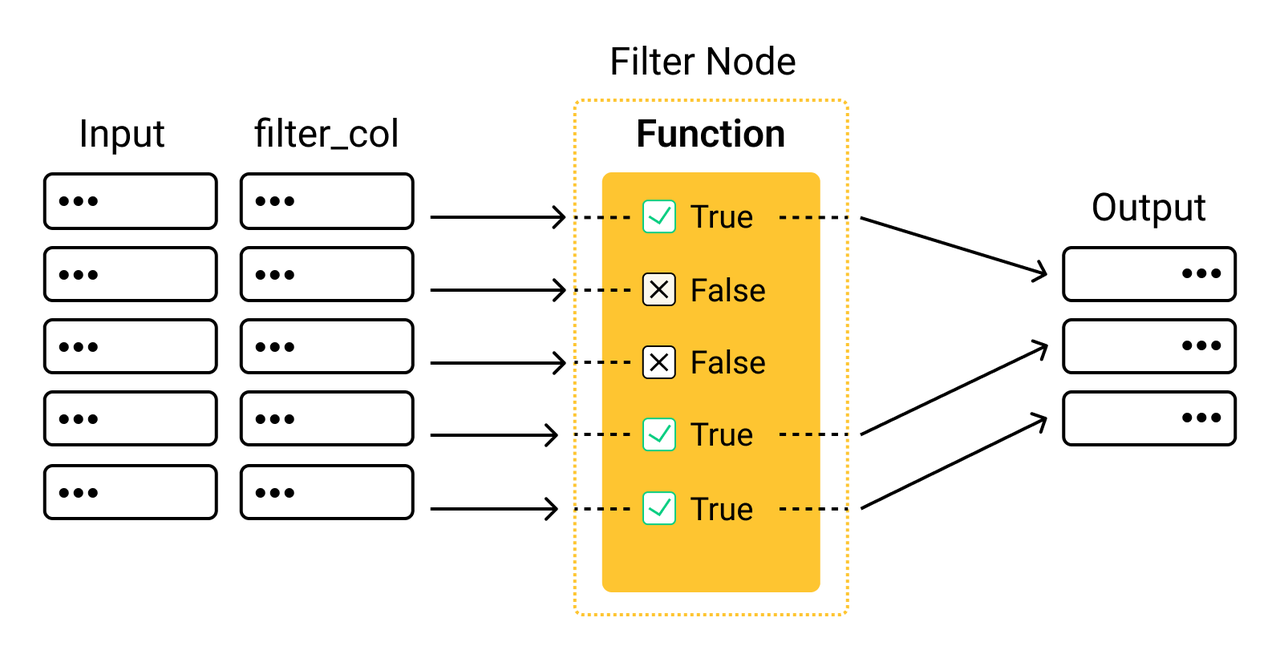

A filter node filters rows based on the Boolean return value (True or False) of a given function `fn`, which takes `filter_columns` as input. The node only operates on the columns specified by `input_schema`. Refer to the filter API for more details.

Since a filter node only removes rows and does not change any values, its input and output should correspond: `input_schema` and `output_schema` should contain the same number of columns. The function `fn` takes `filter_columns` as input, and these columns can overlap with one or more of the columns specified in `input_schema`.
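To make the row-level behaviour concrete, here is a minimal pure-Python model of what a filter does. It is an illustration, not Towhee's implementation: it assumes rows are plain dicts keyed by column name, and `filter_rows` is a hypothetical helper. Each row is kept whole when `fn`, applied to the row's `filter_columns` values, returns True, and dropped otherwise.

```python
from typing import Any, Callable, Dict, List, Sequence

Row = Dict[str, Any]

def filter_rows(rows: List[Row], filter_columns: Sequence[str],
                fn: Callable[..., bool]) -> List[Row]:
    """Keep the rows for which fn(*filter_column_values) is True (illustrative sketch)."""
    kept = []
    for row in rows:
        # fn receives the values of filter_columns, in the given order
        if fn(*(row[c] for c in filter_columns)):
            # The row passes through unchanged; a filter never rewrites values
            kept.append(row)
    return kept

rows = [
    {'img': 'a.png', 'obj_res': [('box1', 'cat', 0.9)]},
    {'img': 'b.png', 'obj_res': []},
]
result = filter_rows(rows, ['obj_res'], lambda x: len(x) > 0)
print(result)
# [{'img': 'a.png', 'obj_res': [('box1', 'cat', 0.9)]}]
```

Note that the filter column (`obj_res`) only decides whether a row survives; the `img` value of a surviving row is carried along untouched.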

Example

We use the `filter(input_schema, output_schema, filter_columns, fn, config=None)` interface to create a filter node.
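In the example that follows, schema arguments are passed either as a single column name (`'obj_res'`) or as a tuple of names (`('img', 'obj_res')`). The sketch below shows one way such arguments could be normalized and checked against the rule that a filter node's `input_schema` and `output_schema` have the same number of columns. `normalize_schema` and `check_filter_args` are hypothetical helpers for illustration, not part of the Towhee API.

```python
from typing import Sequence, Tuple, Union

Schema = Union[str, Sequence[str]]

def normalize_schema(schema: Schema) -> Tuple[str, ...]:
    """Turn 'col' or ('a', 'b') into a tuple of column names (hypothetical helper)."""
    if isinstance(schema, str):
        return (schema,)
    return tuple(schema)

def check_filter_args(input_schema: Schema, output_schema: Schema,
                      filter_columns: Schema) -> Tuple[Tuple[str, ...], ...]:
    """Normalize filter arguments and enforce equal input/output column counts (sketch)."""
    ins = normalize_schema(input_schema)
    outs = normalize_schema(output_schema)
    cols = normalize_schema(filter_columns)
    if len(ins) != len(outs):
        raise ValueError(
            f'input_schema has {len(ins)} columns but output_schema has {len(outs)}')
    return ins, outs, cols

print(check_filter_args(('img', 'obj_res'), ('img', 'obj_res'), 'obj_res'))
# (('img', 'obj_res'), ('img', 'obj_res'), ('obj_res',))
```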

Now let's take an object detection pipeline as an example to demonstrate how to use a filter node. This pipeline detects objects in images, filters out images in which no objects are detected, and then extracts the feature embedding of each detected object.

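```python
from towhee import pipe, ops

obj_filter_embedding = (
    pipe.input('url')
        # Decode each image URL into an RGB image
        .map('url', 'img', ops.image_decode.cv2_rgb())
        # Detect objects in each image
        .map('img', 'obj_res', ops.object_detection.yolo())
        # Keep only images in which at least one object was detected
        .filter(('img', 'obj_res'), ('img', 'obj_res'), 'obj_res', lambda x: len(x) > 0)
        # Flatten the detections: one row per object with its box, class and score
        .flat_map('obj_res', ('box', 'class', 'score'), lambda x: x)
        # Crop each detected object out of its image
        .flat_map(('img', 'box'), 'object', ops.towhee.image_crop())
        # Extract a ResNet-50 feature embedding for each cropped object
        .map('object', 'embedding', ops.image_embedding.timm(model_name='resnet50'))
        .output('url', 'object', 'class', 'score', 'embedding')
)

data = ['https://towhee.io/object-detection/yolo/raw/branch/main/objects.png',
        'https://github.com/towhee-io/towhee/raw/main/assets/towhee_logo_square.png']

# Run the pipeline on both image URLs
res = obj_filter_embedding.batch(data)
```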

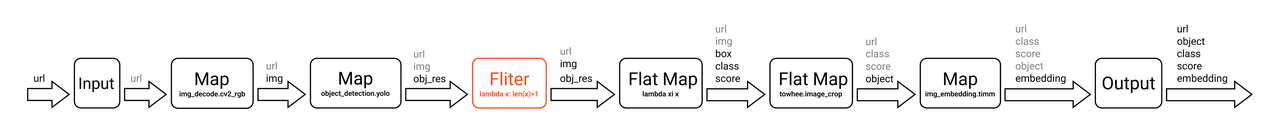

The DAG of the obj_filter_emnedding pipeline is illustrated below.

The data processing workflow of the main nodes is as follows.

- Map: Uses the image-decode/cv2-rgb operator to decode image URLs (`url`) into images (`img`).
- Map: Uses the object-detection/yolo operator to detect target objects in the images (`img`), and returns a list with the information of the detected objects in each image (`obj_res`).
- Filter: Applies the function `lambda x: len(x) > 0` to check whether the length of `obj_res` is greater than 0, that is, whether at least one object was detected. Images in which no objects are detected are filtered out.
- Flat map: Applies the `lambda x: x` function to flatten `obj_res` for the images that pass the filter. The output of this node consists of three columns: the position of each detected object (`box`), its class (`class`), and its score (`score`).
- Flat map: Uses the towhee/image-crop operator to crop the images (`img`) and obtain images of the target objects (`object`).
- Map: Uses the image-embedding/timm operator to extract the features of each object (`object`) and obtain the corresponding feature embedding (`embedding`).
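The Filter step and the first Flat map step can be mimicked in plain Python to see how the rows change. The detection results below are made-up placeholder values, not actual YOLO output, and each `obj_res` entry is assumed to be a `(box, class, score)` tuple, matching the three output columns of the flat map. Carrying `url` along with every flattened row is also a simplification for illustration.

```python
# Made-up detection results per image (placeholders, not real YOLO output).
rows = [
    {'url': 'img_with_objects.png',
     'obj_res': [([10, 20, 110, 220], 'person', 0.91),
                 ([300, 40, 380, 150], 'dog', 0.78)]},
    {'url': 'img_without_objects.png', 'obj_res': []},
]

# Filter: keep only rows whose obj_res is non-empty (lambda x: len(x) > 0).
kept = [r for r in rows if len(r['obj_res']) > 0]

# Flat map: one output row per detected object, split into box/class/score.
flat = [
    {'url': r['url'], 'box': box, 'class': cls, 'score': score}
    for r in kept
    for box, cls, score in r['obj_res']
]

for row in flat:
    print(row['url'], row['class'], row['score'])
# img_with_objects.png person 0.91
# img_with_objects.png dog 0.78
```

The image without detections never reaches the flat map, so no empty rows are produced downstream.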

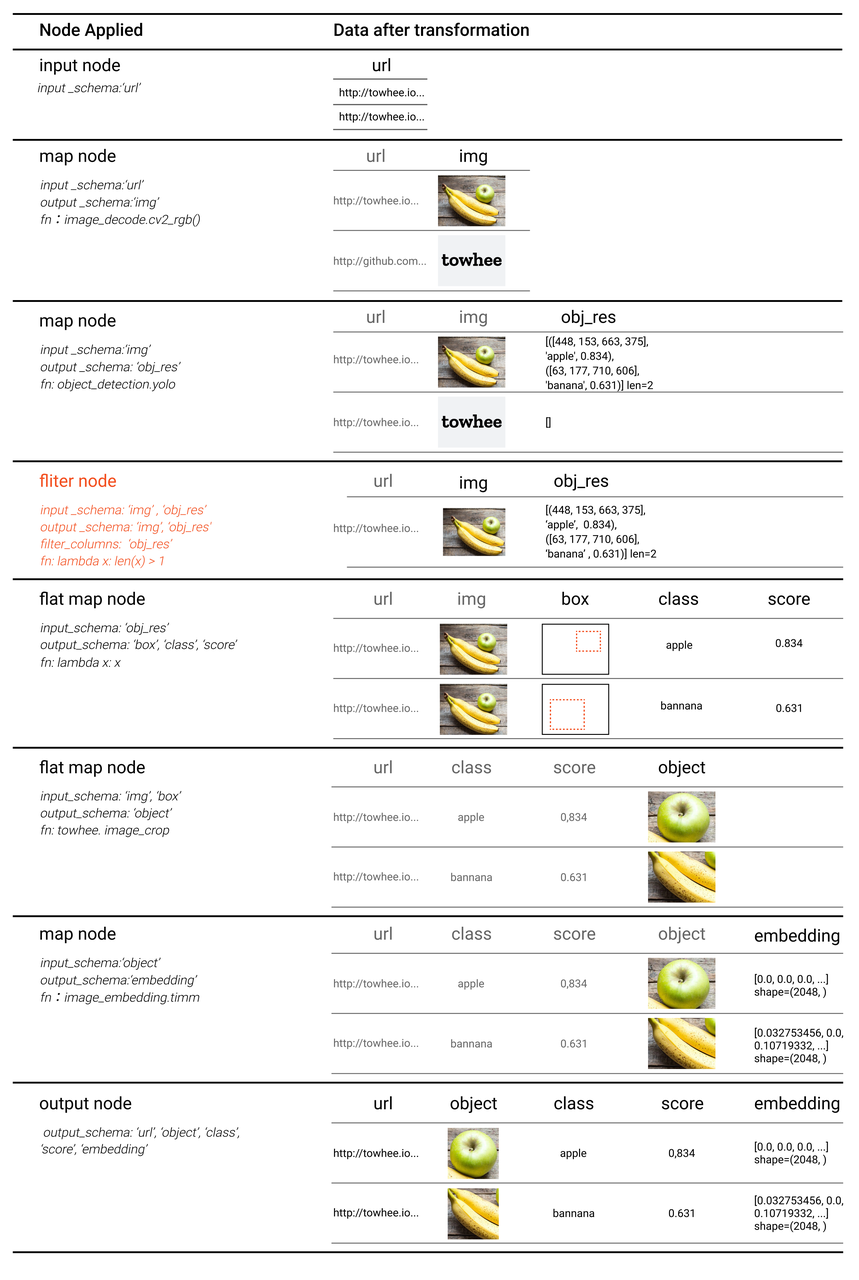

When the pipeline is running, data transformation in each node is illustrated below.

Note:

- The data in the

imgandobjectcolumns are in the format oftowhee.types.Image. These data represent the decoded images. For easier understanding, these data are displayed as images in the following figure.- The data in the

boxcolumn are in the format oflist. These data are coordinates that represent the location of the objects in the images (Eg.[448,153,663,375]). For easier understanding, these data are displayed as the dotted squares in the images.
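A box such as `[448, 153, 663, 375]` can be used directly as pixel coordinates for cropping. The sketch below assumes the order `[x_min, y_min, x_max, y_max]` and represents an image as a nested list of pixel rows; both are assumptions for illustration, and `crop` is a hypothetical helper, not the towhee/image-crop operator.

```python
from typing import List, Sequence

def crop(image: List[List[int]], box: Sequence[int]) -> List[List[int]]:
    """Return the sub-image inside box, assuming box = [x_min, y_min, x_max, y_max]."""
    x_min, y_min, x_max, y_max = box
    # Rows are indexed by y and columns by x; the max bounds are treated as exclusive here.
    return [row[x_min:x_max] for row in image[y_min:y_max]]

# A 4x5 toy "image" whose pixel value encodes its position as 10 * y + x.
image = [[10 * y + x for x in range(5)] for y in range(4)]
print(crop(image, [1, 1, 3, 3]))
# [[11, 12], [21, 22]]
```

Whether the real operator treats the maximum coordinates as inclusive or exclusive is not stated here; check the operator's documentation before relying on exact pixel bounds.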

Notes

- The function in a filter node should return either `True` or `False` for each row; only the rows for which it returns `True` are kept.
- The `input_schema` and the `output_schema` in a filter node should have the same number of columns.
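Because the filter function is expected to return `True` or `False`, a strict check can help while developing a pipeline. The following is a hypothetical debugging wrapper (`strict_predicate` is not a Towhee API) that raises if the wrapped function returns anything other than a `bool`; for example, accidentally writing `lambda x: len(x)` instead of `lambda x: len(x) > 0` would be caught.

```python
from typing import Any, Callable

def strict_predicate(fn: Callable[..., Any]) -> Callable[..., bool]:
    """Wrap fn so that any non-bool return value raises TypeError (hypothetical helper)."""
    def wrapper(*args: Any) -> bool:
        result = fn(*args)
        if not isinstance(result, bool):
            raise TypeError(
                f'filter function returned {type(result).__name__}, expected bool')
        return result
    return wrapper

has_objects = strict_predicate(lambda x: len(x) > 0)
print(has_objects([]), has_objects([('box', 'cat', 0.9)]))
# False True
```

Since `fn` is an ordinary Python callable, a wrapped function like `has_objects` could be passed in its place during debugging.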